One of the common challenges DevOps teams encounter after implementing Infrastructure as Code (IaC) is: “How do we manage and orchestrate applications on our newly provisioned infrastructure?”

env0 is a CI/CD platform for IaC tools (Terraform CLI, OpenTofu, CloudFormation, Pulumi, etc.), and we’ve been helping customers provision the network, compute, and service layers.

As we do that, we constantly find ourselves dealing with different setups and applications of all shapes and sizes: serverless, containerized, or monolithic.

In this post, I’ll dive into one of these use cases and show how env0 streamlines application management by integrating with ArgoCD, a popular application deployment tool for Kubernetes used by many of our customers.

Where does env0 end and ArgoCD begin?

To set the tone, let's start with a diagram of a simple 3-tier application.

In the case of containerized apps in K8s (whether it's Helm charts or K8s manifests), we are going to be deploying the core infrastructure—the network and compute layers—using env0, then have ArgoCD manage the application layer.

How env0 makes application management and orchestration easier

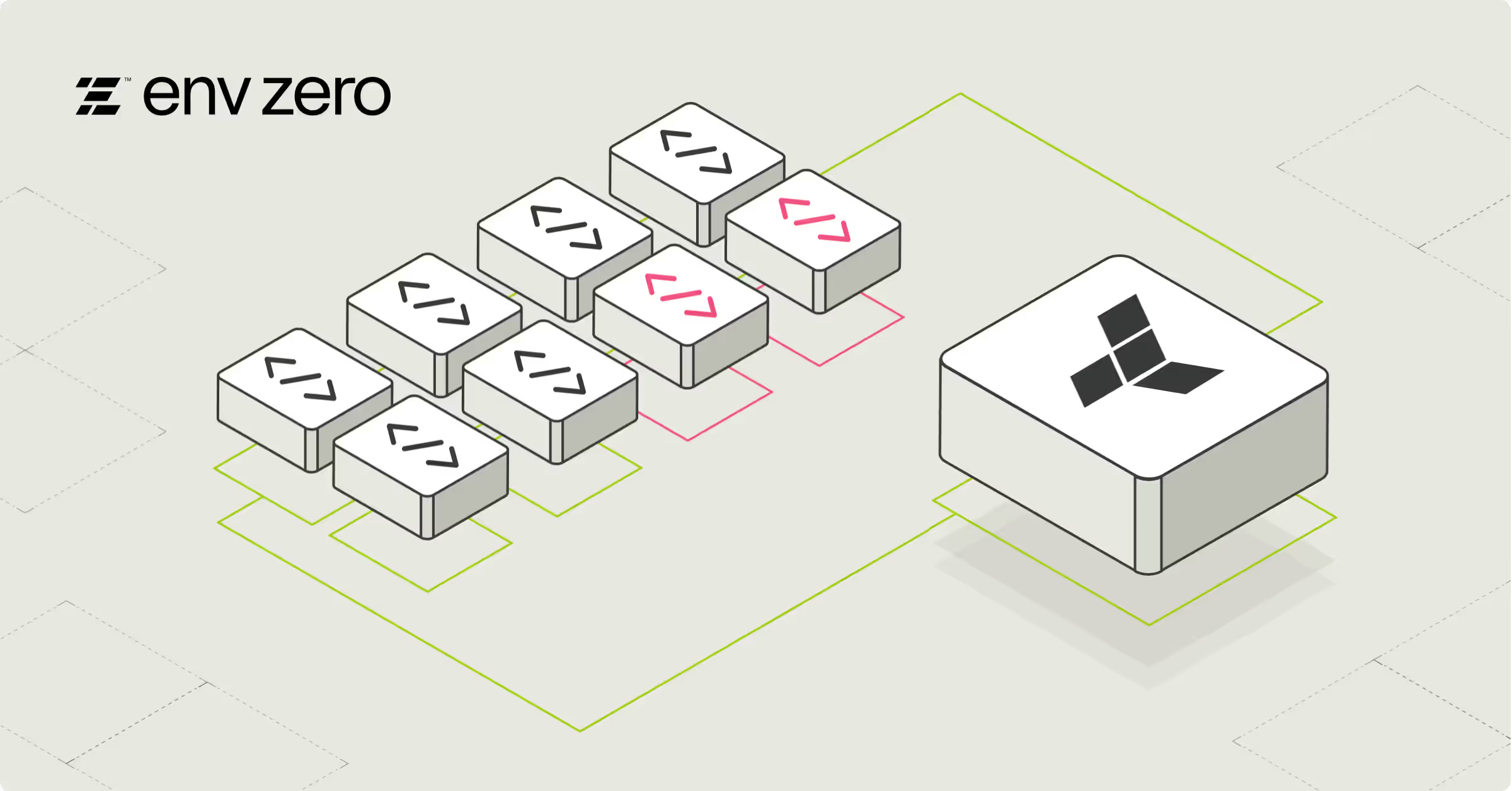

What’s not shown above is how ArgoCD gets installed and configured in the K8s cluster. So let’s expand the diagram and then walk through the benefits you get from env0 with this model:

First (1), we use env0 to install ArgoCD (via a Helm chart or Kubernetes manifest), using env0's Helm chart support. As a platform team, you can easily spin up a new cluster and install ArgoCD (and other required services like cert-manager, an ingress controller, and/or a metrics service).

Second (2), we take advantage of ArgoCD’s declarative Application CRD to install apps through a K8s manifest. ArgoCD reads the configuration file(s) and installs the app(s), managing the application lifecycle from then on.
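To make step (2) concrete, here is a minimal sketch of what an Application manifest can look like when the app is a directory of plain K8s manifests in Git. The application name, repository URL, path, and namespaces are all hypothetical placeholders, and the automated sync policy is an assumption rather than something this setup requires.

```yaml
# Hypothetical Argo CD Application that deploys plain Kubernetes manifests from Git.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: example-app            # hypothetical application name
  namespace: argocd            # assumed: the namespace where Argo CD itself runs
spec:
  project: default             # Argo CD's built-in default project
  source:
    repoURL: https://github.com/example-org/example-app.git  # hypothetical repo
    targetRevision: main       # branch, tag, or commit to track
    path: k8s                  # hypothetical directory holding the manifests
  destination:
    server: https://kubernetes.default.svc  # the cluster Argo CD is running in
    namespace: example-app     # target namespace for the app's resources
  syncPolicy:
    automated:                 # assumption: sync automatically instead of manually
      prune: true              # delete resources that were removed from Git
      selfHeal: true           # revert manual drift in the cluster
    syncOptions:
      - CreateNamespace=true   # create the target namespace if it is missing
```

Because this is just another K8s manifest, it can be deployed through the same env0 pipeline that installed ArgoCD.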

What are the benefits of using ArgoCD and env0?

Not only can you now configure your application delivery platform in a consistent manner and at scale by utilizing env0 to install ArgoCD and the applications it manages, but you can also take advantage of env0's built-in RBAC, policy controls, TTL/scheduling configuration, and templating capabilities.

If your end users require a specific application or service in their Kubernetes cluster, they can provision it using our self-service management features.

Video Tutorial: Implementing ArgoCD with env0

Transcript

----------------------------------

Hello, env0 user. My name is Andrew, and today I want to show you the env0 and ArgoCD integration. So what are the benefits of integrating env0 with ArgoCD? One is you get a codified platform for application deployments. And what do I mean by that? Well, on env0, you already are deploying your infrastructure with Terraform or Pulumi or CloudFormation.

So you're setting up your network and compute layer. But now customers also want to manage the ArgoCD installation, as well as the applications behind that. So with env0’s Helm support or Kubernetes manifest, you can install ArgoCD through your env0 pipeline. And what that means is you – as a platform engineering team or a DevOps team – you can now codify the entire application delivery process.

So you get your network layer, compute layer, as well as Argo, your application delivery tool, all in code, for a repeatable deployment method for your application teams. What that also gets you with env0 is the ability to provide self-service for your applications. ArgoCD has an application CRD that you can deploy through env0.

So, by deploying those application CRDs within env0, you can now introduce access control—so approval workflow, approval policies through that deployment process. You can introduce ‘time to live,’ so your applications, whether they're dev tests or ephemeral, you can have a ‘time to live’ setting on it, so those applications will self-destruct and not consume those Kubernetes resources.

Then you can also introduce best practices, essentially an easier way for your development team to deploy common applications that they might need in their Kubernetes cluster and have them self-service that.

Let's take a look at the deployment flow and what that looks like.

So again, I mentioned earlier that you can deploy your network layer already and compute layer already with env0. With env0's Helm chart and our Kubernetes manifest, you can now deploy ArgoCD as part of that process; and then to add on top of that, using ArgoCD's application CRD, you can deploy the application metadata essentially to let ArgoCD then manage the actual delivery of that application within the Kubernetes cluster.

Let's see that in action.

So here in env0, I've already deployed a Kubernetes cluster and I've installed Argo onto that cluster. So if I come into my Argo UI admin interface, you can see here, it's just vanilla Argo with no applications installed just yet. Let's take a look at the Kubernetes cluster itself.

If I do a [.code]k get namespace[.code] ([.code]kgns[.code]) here, you can see I have Argo.

[.code]k get pods[.code] ([.code]kgp[.code]) and namespace Argo ([.code]-n argo[.code])... Cool.

And let's get pods in the metrics server ([.code]kgp -n metrics-server[.code]).

Okay, so nothing exists. So if I try to do [.code]k top pods[.code] ([.code]ktp[.code]) right now... No, the metrics API is not available. Let's go fix that.

In env0, I previously deployed this metrics server. I'm going to redeploy it now.

And while that's running, I'll show you what it's doing behind the scenes. Essentially, it is, again, a Kubernetes manifest. Let's go take a look at that manifest. This is the application YAML manifest, which is, again, an Argo CRD. And I'm deploying the metrics server. The most important part here is the source.

This is a Helm source. So I'm deploying version 3.11 of the metrics server. And if I go back to env0, you can see that it already finished deploying. And if I go to Argo and refresh, it is now progressing on the installation of the metrics server. And in a few seconds… there you go. It's healthy now.
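For readers following along without the video, an Application manifest along the lines of the one described here might look like the sketch below. It is not the exact file from the demo. The chart repository URL is the upstream metrics-server Helm repository, `3.11.0` is an assumed full chart version for the "3.11" mentioned above, the `argo` and `metrics-server` namespaces are taken from the commands in this transcript, and the sync policy is an assumption.

```yaml
# Sketch of an Argo CD Application that installs metrics-server from a Helm source.
apiVersion: argoproj.io/v1alpha1
kind: Application
metadata:
  name: metrics-server
  namespace: argo              # namespace where Argo runs in this demo (from `-n argo`)
spec:
  project: default             # Argo CD's built-in default project
  source:
    repoURL: https://kubernetes-sigs.github.io/metrics-server/  # upstream Helm repo
    chart: metrics-server      # chart name within that Helm repo
    targetRevision: 3.11.0     # assumed full version for "3.11"
  destination:
    server: https://kubernetes.default.svc  # the cluster Argo is running in
    namespace: metrics-server  # matches `kgp -n metrics-server`
  syncPolicy:
    automated: {}              # assumption: Argo syncs without a manual click
    syncOptions:
      - CreateNamespace=true   # create the namespace if it does not exist yet
```

The `source` block is what marks this as a Helm source: `chart` plus a Helm repository URL, instead of a Git `path`.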

Cool. So let's go back into my Kubernetes cluster. [.code]k get pods[.code] in the metrics server ([.code]kgp -n metrics-server[.code]). The metrics service is now running.

Now, if I do [.code]k top pods[.code], all namespaces ([.code]ktp -A[.code])... this is… awesome. Now, I can track how much CPU or memory each of these pods is consuming to give me a better understanding of anything that's going wrong in my Kubernetes cluster.

So just to recap, what I've shown you so far is env0 deploying ArgoCD through the Helm chart, as well as env0 deploying the application CRD.

This integration now gives you the ability to control the application delivery process through env0. If you find that useful, please feel free to reach out with any questions. I look forward to hearing from you.

Cheers.
